albator wrote: Last time I checked, it comes with dozens of examples that you can tune right away and display as soon as you compile the binaries. All in real time (OK, that depends on the resolution you use).

You can even run it on the CPU (OptiX Prime) if you want to integrate it into your C++ code. You don't need to program in CUDA (you still need an NVIDIA GPU, though...).

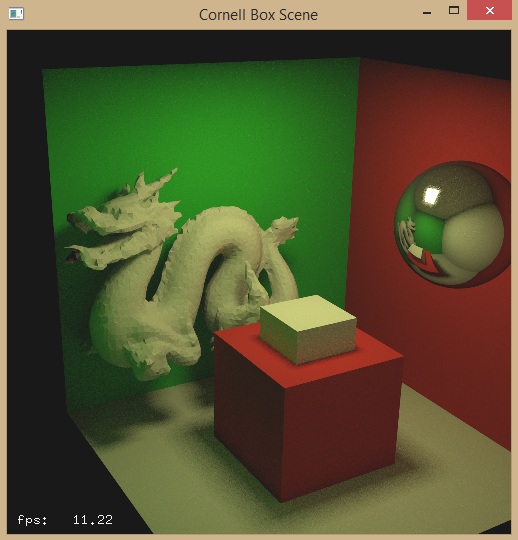

Yes, I've worked with Intel Embree and NVIDIA OptiX. In fact, I did two implementations using those frameworks, mostly to accelerate ray traversal. I might release them on GitHub or somewhere similar, although their documentation will be lacking.
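For context on what "accelerating ray traversal" involves: Embree and OptiX walk a bounding volume hierarchy (BVH) and test the ray against each node's axis-aligned bounding box. Below is a minimal Python sketch of that per-node box test (the standard slab method). It is a generic textbook version for illustration, not code from either library or from the implementations mentioned above, and the function name `ray_hits_aabb` is hypothetical.

```python
import math


def ray_hits_aabb(origin, direction, box_min, box_max, t_max=math.inf):
    """Slab test: return the entry distance t if the ray hits the box, else None.

    Hypothetical helper for illustration; real BVH traversal runs this test
    (in heavily optimized form) for every node the ray visits.
    """
    t_near, t_far = 0.0, t_max
    for axis in range(3):
        o, d = origin[axis], direction[axis]
        lo, hi = box_min[axis], box_max[axis]
        if d == 0.0:
            # Ray is parallel to this pair of slabs: it must start between them.
            if o < lo or o > hi:
                return None
            continue
        inv_d = 1.0 / d
        t0, t1 = (lo - o) * inv_d, (hi - o) * inv_d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval where the ray is inside all slabs so far.
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return None
    return t_near
```

For example, `ray_hits_aabb((0, 0, -5), (0, 0, 1), (-1, -1, -1), (1, 1, 1))` returns `4.0`: the ray enters the unit box four units from its origin. If a node's box is missed, the whole subtree below it can be skipped, which is where the speedup comes from.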

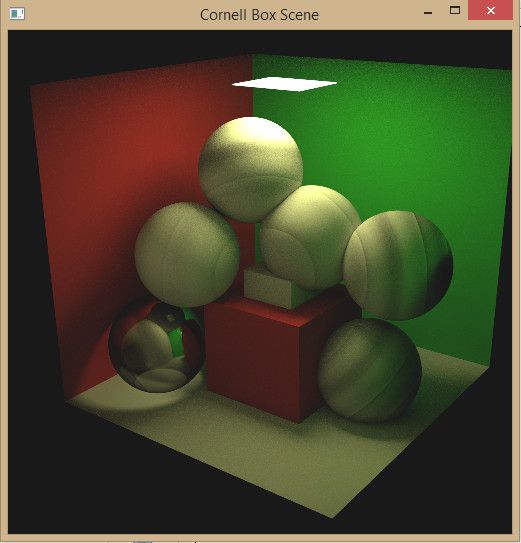

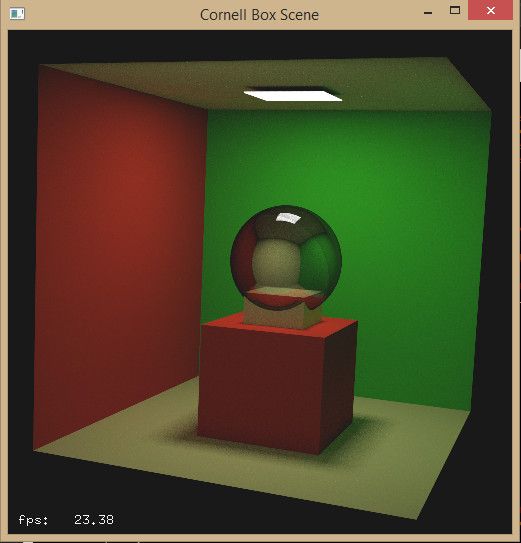

In terms of resolution, I'm rendering 1024 x 1024 pixels, with a depth of 8 for the paths emitted from each pixel.
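To put those numbers in perspective, here is the arithmetic as a small Python helper. It assumes one camera path per pixel per pass (the `samples_per_pixel` parameter is an assumption, not something stated in the post) and treats 8 as the maximum number of segments per path, so the result is an upper bound: paths that miss the scene or get absorbed stop early.

```python
def ray_budget(width, height, max_depth, samples_per_pixel=1):
    """Paths started per pass, and the upper bound on ray segments if no path terminates early."""
    paths = width * height * samples_per_pixel
    return paths, paths * max_depth


paths, max_segments = ray_budget(1024, 1024, 8)
print(paths)         # 1048576
print(max_segments)  # 8388608
```

So a single full-resolution pass at depth 8 can involve over 8 million ray segments, each needing its own traversal of the acceleration structure.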

Yes, OptiX can use and leverage the CPU, but it's mostly used to load CUDA programs compiled to PTX files and run those instructions on the GPU. You can even mix an OptiX context with a CUDA context to get that extra programmability. OptiX can also be used as a post-processing engine together with an OpenGL application; it's quite cool, in fact.

PicassoCT wrote: Question:

When in motion, does it have the same graininess as in this video?

https://www.youtube.com/watch?v=jUIOQm4UIuo

If yes, could a simple fragment shader that blurs already-rendered pixels along the motion direction help?

Also, can you warp the light physics, producing lenses and black holes?

Yes, noise is indeed the biggest drawback of global illumination algorithms. In mathematical terms, the variance of the pixel estimators is what shows up as noise in the images, and if you cut an algorithm's quality to make it faster, you usually cut and simplify terms in the estimator, which results in more noise.
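The link between estimator variance and visible noise can be shown with a toy Monte Carlo "pixel". The sketch below assumes a stand-in integrand (x² over [0, 1], exact value 1/3) instead of a real light transport integral, and the names `pixel_estimate` and `estimator_noise` are hypothetical. Taking fewer samples is only one way of cutting quality, but it shows the general rule: the noise (standard deviation of the estimate) falls roughly as 1/√N, so halving it costs about four times as many samples.

```python
import random
import statistics


def pixel_estimate(rng, n_samples):
    """Monte Carlo estimate of a toy 'pixel': the integral of x^2 over [0, 1] (exact value 1/3)."""
    return sum(rng.random() ** 2 for _ in range(n_samples)) / n_samples


def estimator_noise(n_samples, trials=2000, seed=1):
    """Mean and standard deviation of the pixel estimate over many independent renders.

    The standard deviation is what appears on screen as grain.
    """
    rng = random.Random(seed)
    estimates = [pixel_estimate(rng, n_samples) for _ in range(trials)]
    return statistics.mean(estimates), statistics.stdev(estimates)


for n in (4, 16, 64):
    mean, noise = estimator_noise(n)
    print(f"{n:3d} samples/pixel: mean={mean:.4f} noise={noise:.4f}")
```

Every sample count gives a mean close to 1/3 (the estimator is unbiased), but the noise at 64 samples per pixel should come out roughly a quarter of the noise at 4 samples, since √(64/4) = 4.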

Granted, mine doesn't have that much noise, because I consider a frame to be the full execution of a high-quality render. In that video, he cut the quality by extreme amounts, maybe not even rendering every single pixel on the screen as he rotates or moves the camera. I could do that too to obtain a steady FPS, but instead I render all pixels and trace each ray through my scene to a depth of at least 8.

It still has some degree of noise, because light rays reach surfaces and make different contributions to them, so some areas of the scene are hit by more rays than others.

If I understood you correctly, yes, you can warp light rays, since essentially the goal of all these algorithms is to have paths reach light sources from the camera eye (and vice versa) and then get a color contribution from those paths.

The way these paths are created and interact with scene geometry can be altered to create black holes, in a sense; it depends on what you want to do to a ray when it hits a geometry, for example. Currently, I reflect or refract a ray when it hits a geometry I choose, and then I just store the type of intersected material in that ray. I could create a sort of black-hole object and warp rays as they hit its surface.
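A minimal sketch of that hit-handling idea, assuming a simple per-material dispatch: mirrors reflect, glass refracts (falling back to reflection on total internal reflection), and the ray records the material it last hit. The `"black_hole"` material and its `warp` rule are hypothetical: the pull toward `center` with strength proportional to 1/r² is made up for illustration and is not physically accurate gravitational lensing. All names here are illustrative, not taken from the renderer discussed above.

```python
import math
from dataclasses import dataclass

Vec = tuple[float, float, float]


def dot(a, b): return sum(x * y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))


def reflect(d, n):
    """Mirror direction d about unit normal n."""
    return add(d, scale(n, -2.0 * dot(d, n)))


def refract(d, n, eta):
    """Snell's law for unit d and n (n facing against d), eta = n_incident / n_transmitted.

    Returns None on total internal reflection.
    """
    cos_i = -dot(d, n)
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None
    return add(scale(d, eta), scale(n, eta * cos_i - math.sqrt(k)))


def warp(d, hit_point, center, strength):
    """Hypothetical 'black hole' rule: bend d toward center, more strongly when closer (1/r^2).

    Not physical lensing; undefined if hit_point == center.
    """
    to_center = sub(center, hit_point)
    pull = scale(normalize(to_center), strength / dot(to_center, to_center))
    return normalize(add(d, pull))


@dataclass
class Ray:
    origin: Vec
    direction: Vec
    last_material: str = "none"  # the ray stores the type of material it last hit


def scatter(ray, hit_point, normal, material, eta=1.0 / 1.5,
            center=(0.0, 0.0, 0.0), strength=0.5):
    """Spawn the continuation ray for a hit, dispatching on material type."""
    if material == "mirror":
        new_dir = reflect(ray.direction, normal)
    elif material == "glass":
        refracted = refract(ray.direction, normal, eta)
        new_dir = refracted if refracted is not None else reflect(ray.direction, normal)
    elif material == "black_hole":
        new_dir = warp(ray.direction, hit_point, center, strength)
    else:
        raise ValueError(f"unknown material: {material}")
    return Ray(hit_point, new_dir, material)
```

The point of the design is that only `scatter` needs to change to add a new kind of ray behavior; traversal and shading stay the same, which is why a warping object fits naturally into a path tracer.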

A simple post-processing blur shader can work as an alternative to emitting more samples. It would reduce render fidelity, but I could stop the blurring once enough samples have been taken.
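One way to realize "blur until enough samples have been taken" is progressive accumulation: keep a running sum of per-pass samples and display the average through a blur whose radius shrinks to zero after a threshold. The sketch below assumes a grayscale image stored as nested lists, an isotropic box blur (not the motion-direction blur asked about; that would sample along each pixel's screen-space motion vector instead), and hypothetical parameters `max_radius` and `blur_until`.

```python
import math


def box_blur(image, radius):
    """(2r+1)x(2r+1) box blur on a 2D grayscale image; out-of-bounds neighbors are skipped."""
    if radius == 0:
        return [row[:] for row in image]
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += image[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


class ProgressiveAccumulator:
    """Running average of render passes, shown through a blur that fades out as samples accumulate."""

    def __init__(self, width, height, max_radius=2, blur_until=16):
        self.sum = [[0.0] * width for _ in range(height)]
        self.samples = 0
        self.max_radius = max_radius  # hypothetical: blur radius for the very first pass
        self.blur_until = blur_until  # hypothetical: stop blurring after this many passes

    def add_pass(self, pass_image):
        for y, row in enumerate(pass_image):
            for x, value in enumerate(row):
                self.sum[y][x] += value
        self.samples += 1

    def blur_radius(self):
        if self.samples >= self.blur_until:
            return 0
        # Shrink linearly from max_radius toward 0 as the sample count nears the threshold.
        remaining = 1.0 - self.samples / self.blur_until
        return math.ceil(self.max_radius * remaining)

    def display(self):
        if self.samples == 0:
            raise ValueError("add at least one pass before displaying")
        mean = [[v / self.samples for v in row] for row in self.sum]
        return box_blur(mean, self.blur_radius())
```

Early passes look smooth but soft; once `blur_until` passes have accumulated, `display()` returns the plain running average at full fidelity. When the camera moves, the accumulator would be reset, so the blur kicks back in exactly when the image is noisiest.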

There are multiple ways to reduce noise that I haven't even looked into yet.